The computer industry is notorious for using obscure technical terms. Sometimes this is just to make life difficult for non-technicians. More often, it's because computers are complex, are full of new technologies, and it's not possible to describe them without learning at least some of the language.

Also see part one: A to M.

No-ID Sector Formatting: Reduces wastage by removing the need to store a separate ID field for every sector on the disk. This leaves 10 to 15% more space free for your data. It has beneficial side-effects too, notably allowing the tracks to be placed closer together. This is an IBM technology, though other makers are doing similar things. IBM Storage have a technical but quite readable explanation of it here.

NPU: Numeric Processing Unit, an extra part of most microprocessors specially designed to process complex maths functions. Also called the Floating Point Unit (FPU), maths co-processor, or co-pro. Traditionally, CPUs were very good at doing integer maths (whole numbers) but required extra programming to do floating point work (12.768 divided by Pi, for example). From the late Seventies on, it gradually became more common to add an optional second unit to the CPU, an NPU, just to do complex mathematics. 8088 motherboards, for example, could take an 8087 maths co-pro if you wanted to do a lot of statistical or engineering calculations. For the 286 there was the 287 co-pro; for the 386, the 387. With the 486, the NPU was integrated into the same chip, except in the 486SX, where it was artificially disabled for marketing reasons. All modern CPUs now have an NPU built in as standard. These days a lot more software uses it, particularly for graphics work, but it remains much less important than the integer unit for most tasks, and probably always will. Note that an NPU sits idle 80 or 90% of the time: it is only active when a particular programme needs complex maths functions, and then only if the programmer or compiler has deliberately written NPU code for it. On those rare occasions when it is used, however, it can make a huge difference.
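To give a feel for the "extra programming" an integer-only CPU needed, here is a minimal Python sketch of one common integer-only workaround, fixed-point arithmetic, in which every value is stored as a whole number scaled by a constant. It is an illustration only, not how the 8087 or any particular floating-point library worked; the scale factor and function names are our own assumptions. It works through the 12.768 divided by Pi example, with Pi rounded to 3.1416.

```python
# Fixed-point sketch: values are plain integers scaled by SCALE.
# SCALE and the function names are illustrative assumptions.
SCALE = 10_000  # four decimal places


def fixed_div(a: int, b: int) -> int:
    """Divide two scaled integers using integer operations only.

    Multiplying by SCALE first keeps the result at the same scale;
    // rounds down, which is fine for non-negative values.
    """
    return (a * SCALE) // b


def fixed_to_str(value: int) -> str:
    """Format a non-negative scaled integer as a decimal string."""
    return f"{value // SCALE}.{value % SCALE:04d}"


dividend = 127_680   # 12.768 scaled by 10,000
pi_approx = 31_416   # Pi rounded to 3.1416, scaled by 10,000
quotient = fixed_div(dividend, pi_approx)
print(fixed_to_str(quotient))  # 4.0641
```

Every multiply and divide needs this kind of bookkeeping, plus care over overflow and rounding, which is the sort of work that floating-point hardware takes off the programmer's hands.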

Overclocking: Usually refers to CPUs, but can also apply to motherboards, RAM, and video cards. Most components are designed to run at a certain maximum speed. The old Pentium-166, for example, was tested and manufacturer-certified to run reliably at 166MHz. If you ran it faster than that (let's say at 200MHz), it might or might not have worked reliably. It would certainly run hotter and have a shorter working life. It might hang or crash regularly, and it would certainly void your warranty. On the other hand, if it did run reliably (and there was only one way to find out), it was significantly faster, and you might have been willing to live with a 50% reduction in service life in order to get the extra speed. (Who cares if it lasts 10 years instead of 20? You'd have a new one long before that anyway.) The basic idea of overclocking remains exactly the same today. It is only for those who are willing to take a risk and wear the consequences. If you are going to overclock, remember that it voids your warranty, and don't go too far: you might get away with running a 2500MHz CPU at 2700MHz, but you'd be mad to try it at 3700MHz. Surf across to any of the many dedicated overclocking web sites for much more detail.
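It can help to put overclocks in percentage terms. This small Python sketch does that for the examples above, reading "a 2500" as a 2500MHz part (our assumption about the shorthand); the function name is hypothetical.

```python
def overclock_percent(rated_mhz: float, actual_mhz: float) -> float:
    """How far above its rated clock a part is being run, as a percentage."""
    return (actual_mhz - rated_mhz) / rated_mhz * 100


for rated, actual in [(166, 200), (2500, 2700), (2500, 3700)]:
    print(f"{rated}MHz at {actual}MHz: {overclock_percent(rated, actual):.1f}% over spec")
```

The Pentium-166 at 200MHz works out at about 20% over spec, the modest overclock about 8%, and the "mad" one about 48%.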

P6: The family of sixth-generation Intel CPUs sharing a common internal design. The Pentium Pro was the first of these; it was followed by the Pentium II, Celeron, Xeon, Pentium III, and Pentium M. The P5 family, as you'd expect, was the previous design: Pentium and Pentium MMX.

PCMCIA, PC Card: Stands for Personal Computer Memory Card International Association, which is why most people prefer People Can't Memorise Computer Industry Acronyms. Now called PC Cards, these are the credit-card sized multi-purpose cards that fit into notebooks. You can get almost anything in a PCMCIA card: modem, network card, RAM, SCSI adaptor, sound card, even a hard drive. PCMCIA devices tend to cost two or three times as much as their desktop equivalents. The most common types used to be network cards and modems, but these are almost always built into modern notebooks, and PCMCIA cards are now uncommon.

Platter: A single hard drive disc. Usually, both sides are used. Most drives have two or three platters, but they can have only one, or as many as a dozen. Platters are traditionally made out of aluminium and coated with a hard magnetic material. Many newer drives use a glass or ceramic base.

POST: Power On Self-Test. The routine that computers put themselves through before they boot: counting the RAM and so on. A 'POST card' is a diagnostic tool that you can plug into a motherboard to help work out why you have nothing on the screen. Back in the days of 12-month warranties, a POST card was an expensive but essential part of the well-equipped computer workshop. We still use them today but much less often because in general a board is either still under warranty or else not worth wasting time on.

Remarking. An unethical and illegal practice that used to be quite common. People used to buy a certain CPU, a Pentium 150 say, grind off the markings on it, then replace them with fake markings for a higher speed grade. At one time, unless you knew your wholesaler very well, you were wise to always pay the extra for a retail boxed CPU rather than a bulk-shipped tray one, because the retail ones were more difficult to fake. The most commonly remarked CPUs were the 486SX-33 (really an SX-25), the 486DX/2-66 (a DX/2-50), the Intel 486DX/4-100 (really an Intel DX/4-75 or AMD DX/4-100), and the Pentium 166 (a Pentium 133 or 150). Quite a few others were remarked on a less regular basis. The practice has more or less stopped now, for three reasons: first, most current processors are clock-locked so that their multiplier is fixed and cannot be changed outside the factory; second, AMD and Intel have switched to more secure chip marking methods; and third, most CPUs these days are already quite close to their practical limits, so remarked ones operating out of spec would be even more unreliable than they were in days of old. (Stand fast the 486SX-25 and the Pentium MMX 166, both of which used to nearly always overclock without the slightest trouble.)

RLL (Run Length Limited): The most common data encoding method used by hard drives. It would be logical to expect each bit of data to be recorded directly onto a hard disc as a small magnetised area: each tiny one-bit storage area would be magnetised to represent a one or demagnetised to represent a zero. In fact, this turns out to be quite inefficient. It's much easier for the read head to detect a change in magnetisation level (a "flux reversal") than to detect the high or low level itself. So it is universal practice to encode data in the pattern of flux reversals: for example, a reversal could stand for a one and a non-reversal for a zero. This method is known as Non Return to Zero (NRZ) recording. Alas, it is very difficult to implement in practice: the read channel has to work out how many zeros are contained in a string of non-reversals by measuring the time that has elapsed since the last reversal. Effectively, it has to "close its eyes and count nanoseconds". Any slight timing variation (for example in the speed of the spinning disc) results in data corruption. In consequence, NRZ is not used for disc drives. It would be possible to make a reliable NRZ drive with current technology, but the need for generous timing tolerances would result in very low areal density. Although in theory NRZ gives the best possible efficiency (one bit stored in every potential reversal position), in practice it is a non-starter.
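The reversal-per-one scheme is easy to model. This Python sketch assumes a reversal stands for a one and no reversal for a zero (strictly, this variant is usually called NRZI), and tracks the magnetisation level of each bit cell. The function names are illustrative, and the model ignores all the analogue timing problems that sink NRZ in a real drive; it only shows how long the read channel can be left without a reversal to synchronise on.

```python
def nrz_write(bits: str, start_level: int = 0) -> list[int]:
    """Magnetisation level (0 or 1) of each bit cell.

    A '1' flips the level (a flux reversal); a '0' leaves it unchanged.
    """
    levels = []
    level = start_level
    for bit in bits:
        if bit == "1":
            level ^= 1  # flux reversal
        levels.append(level)
    return levels


def nrz_read(levels: list[int], start_level: int = 0) -> str:
    """Recover the bits by spotting where the level changes."""
    bits = []
    previous = start_level
    for level in levels:
        bits.append("1" if level != previous else "0")
        previous = level
    return "".join(bits)


def longest_blind_run(bits: str) -> int:
    """Longest stretch of cells with no reversal: how long the read
    channel must keep time with nothing to synchronise on."""
    return max(len(run) for run in bits.split("1"))


data = "1100010"
print(nrz_write(data))                          # [1, 0, 0, 0, 0, 1, 1]
print(nrz_read(nrz_write(data)))                # 1100010
print(longest_blind_run("1" + "0" * 20 + "1"))  # 20
```

A sector full of zero bytes would leave the read channel thousands of cells to count with no reversal at all.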

Frequency Modulation (FM) recording has only half the theoretical efficiency of NRZ, but is practical enough to have been used in some very early floppy drives. FM encoding uses one reversal as a clock signal (to keep the read head synchronised) and a second reversal to store data. Thus a one is stored as two reversals in a row, a zero as a reversal followed by a non-reversal.
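A minimal Python sketch of FM encoding, assuming '1' marks a flux reversal and '0' marks none. Each data bit occupies two reversal positions (clock, then data), so the output is always twice as long as the input, which is where FM's half efficiency comes from. The function names are illustrative.

```python
def fm_encode(bits: str) -> str:
    """FM: every bit cell has a clock position, which always holds a
    reversal, followed by a data position, which holds a reversal only
    for a one. '1' = flux reversal, '0' = none."""
    return "".join("1" + bit for bit in bits)


def fm_decode(cells: str) -> str:
    """Keep only the data positions (every second position)."""
    return cells[1::2]


print(fm_encode("10"))    # 1110
print(fm_encode("0000"))  # 10101010
```

Even a long run of zeros produces a reversal in every cell, so the read channel never loses its clock.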

Modified Frequency Modulation (MFM) was used for hard drives until late in the 1980s, and is still used by floppy drives. Like FM it uses clock signals, but on average only one-quarter as many. A "clock reversal" is placed only between consecutive non-reversals (zeros) to keep the read channel synchronised, and with random data only one bit in four is a zero following another zero.
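Here is a Python sketch of the MFM rule, again with '1' meaning a flux reversal and '0' meaning none. Each data bit gets a clock position and a data position, but the clock position holds a reversal only when a zero follows another zero. The assumption that the bit before the data was a zero, and the function names, are our own.

```python
def mfm_encode(bits: str, previous_bit: str = "0") -> str:
    """MFM: a clock position then a data position per bit.
    The clock reversal appears only between two consecutive zeros.
    `previous_bit` (assumed '0') is the bit written before `bits`."""
    out = []
    for bit in bits:
        clock = "1" if previous_bit == "0" and bit == "0" else "0"
        out.append(clock + bit)
        previous_bit = bit
    return "".join(out)


def clock_reversals(cells: str) -> int:
    """Count reversals in the clock positions (even indexes)."""
    return cells[0::2].count("1")


cells = mfm_encode("10110010")
print(cells)                   # 0100010100100100
print(clock_reversals(cells))  # 1 (FM would use 8, one per bit)
```

A useful side-effect of the rule is that two reversals are never adjacent, which is what lets MFM pack its cells more tightly than FM.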

RLL encoding is more efficient again. RLL (2,7), the most common form, allows up to seven consecutive non-reversals (zeros) between reversals, and requires at least two. There are various other forms. Notice that it allows more of the space to be used for data but still sets a maximum safety limit (seven non-reversals, in this example) to prevent the read head losing synchronisation with the disc. For any given number of reversals per track, an RLL-encoded drive needs to be more accurate than an MFM drive but far less accurate than an NRZ drive would have to be. All modern hard drives use one form or another of RLL encoding.
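To see the (2,7) limits in action, here is a Python sketch of an RLL (2,7) encoder using the variable-length code table commonly published for IBM's (2,7) code. Treat the table as an assumption to check against a primary source; the test below at least confirms that its output obeys the (2,7) rules. In the output, '1' marks a flux reversal and '0' marks none. The function names are illustrative.

```python
# Variable-length (2,7) code: data bits -> reversal pattern (twice as long).
RLL_2_7_TABLE = {
    "10": "0100",
    "11": "1000",
    "000": "000100",
    "010": "100100",
    "011": "001000",
    "0010": "00100100",
    "0011": "00001000",
}


def rll27_encode(bits: str) -> str:
    """Encode bits by matching table entries left to right.

    The table is prefix-free, so at most one entry can match at each point.
    """
    out = []
    i = 0
    while i < len(bits):
        for length in (2, 3, 4):
            chunk = bits[i:i + length]
            if len(chunk) == length and chunk in RLL_2_7_TABLE:
                out.append(RLL_2_7_TABLE[chunk])
                i += length
                break
        else:
            raise ValueError(f"cannot encode trailing bits {bits[i:]!r}; pad the input")
    return "".join(out)


def satisfies_2_7(cells: str) -> bool:
    """At least 2 non-reversals between reversals, never more than 7 in a row."""
    runs = cells.split("1")
    return max(len(r) for r in runs) <= 7 and all(len(r) >= 2 for r in runs[1:-1])


encoded = rll27_encode("0011" + "0011" + "000" + "10")
print(encoded)                 # 00001000000010000001000100
print(satisfies_2_7(encoded))  # True
```

The output has the same rate as MFM (two positions per data bit), but because reversals are always at least three positions apart, the positions can be made smaller on the disc.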

RTFM. An essential industry term with many uses. It stands, of course, for read the ... um ... manual.

Scalar and Super-Scalar CPUs. A scalar CPU executes one instruction per clock tick. A 5x86-100, for example, had a 100MHz clock and completed 100 million instructions per second. A sub-scalar CPU takes more than one clock tick to complete each instruction. The old Z-80, for example, averaged about six clock cycles per instruction. A super-scalar CPU completes more than one instruction per clock tick. This is how a Pentium-75 could be faster than a 486-100: it ticked more slowly but did more than one thing per tick. The traditional champions of super-scalar X86 design were Cyrix. The Cyrix equivalent to Intel's and AMD's 233MHz Pentium II and K6 ran at a mere 188MHz. All new CPUs are super-scalar now: the last scalar X86 CPUs to be manufactured were the very low-priced Centaur C6 and, oddly enough, the Cyrix MediaGX.

Note that in the real world different instructions take a different amount of time to execute. In other words, a given CPU might be able to do two of instruction X per cycle, but only one of instruction Y, and take two or three cycles to process an instruction Z. So the terms "scalar" and "super-scalar" are always approximations.
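The arithmetic behind these terms is simply clock rate times average instructions per clock (IPC). This Python sketch applies it to the examples above; the 4MHz clock for the Z-80 is our assumption for illustration, and, as noted above, any IPC figure is only an average.

```python
def mips(clock_mhz: float, instructions_per_clock: float) -> float:
    """Millions of instructions per second: clock rate times average IPC."""
    return clock_mhz * instructions_per_clock


def classify(instructions_per_clock: float) -> str:
    """Label a CPU by its average instructions per clock tick."""
    if instructions_per_clock < 1:
        return "sub-scalar"
    if instructions_per_clock == 1:
        return "scalar"
    return "super-scalar"


def ipc_ratio_for_parity(faster_clock_mhz: float, slower_clock_mhz: float) -> float:
    """How many times more work per tick the slower-clocked chip must do to keep up."""
    return faster_clock_mhz / slower_clock_mhz


print(mips(100, 1.0))                            # 5x86-100 at 1 IPC: 100.0
print(round(mips(4, 1 / 6), 2))                  # hypothetical 4MHz Z-80: 0.67
print(round(ipc_ratio_for_parity(233, 188), 2))  # Cyrix at 188MHz vs 233MHz: 1.24
```

In other words, for the 188MHz Cyrix part to match 233MHz rivals it had to get through roughly a quarter more work per clock tick.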

Second Source. No wise manufacturer will purchase components which are only available from a single supplier. For a product to become successful, it is essential that the customer is able to buy it (or something else very like it) from at least two different manufacturers. What if the main source has a fire, an earthquake, a shortage of materials? Or what if they decide to stop making the part, or their quality and customer service declines, or they put the price up too much? What if they go broke? One of the first things most component manufacturers do when they develop a new technology is license it to one of their competitors: otherwise no-one will buy it.

Sector Translation: PCs usually address the data on a hard drive by specifying the required Cylinder, Head, and Sector (CHS addressing). The file system asks for, say, the data on cylinder 344, head 3, sector 9. CHS addressing has maximum limits: 1024 cylinders, 16 heads, and 63 sectors. The parameters of the drive are loaded into the system BIOS when the computer is first set up. The old Seagate ST-225, for example, had 615 cylinders, 4 heads, and 17 sectors per track: 21MB in all. As drive technology advanced, the CHS limits became a problem. Newer drives had 1500 or 2000 cylinders but only 2 or 4 heads, so the cylinders beyond 1024 (up to half of the drive) could not be reached without special driver software. The solution was sector translation: the drive "lies" to the controller, acting as if it has, say, 10 heads and 1024 cylinders when it actually has 5 heads and 2048 cylinders. As long as the total space is correct, the BIOS can't tell the difference and there is no need for driver software. A more advanced form of sector translation allows drive manufacturers to put extra sectors on the outer tracks (which have more room) but still juggles the extra space back into simple terms that the BIOS can understand. Nearly all drives over about 60MB use sector translation. Also see LBA.
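The numbers in this entry can be checked with a few lines of Python, assuming the usual 512-byte PC sector. The halve-the-cylinders, double-the-heads scheme in `translate` is a hypothetical simplification matching the 5-head, 2048-cylinder example; real drives and BIOSes used several different translation methods. The linear sector numbering in `chs_to_lba` is the standard way of flattening a CHS address.

```python
SECTOR_BYTES = 512  # standard PC sector size


def capacity_bytes(cylinders: int, heads: int, sectors: int) -> int:
    """Total capacity of a CHS geometry."""
    return cylinders * heads * sectors * SECTOR_BYTES


def chs_to_lba(c: int, h: int, s: int, heads: int, sectors: int) -> int:
    """Linear sector number for a CHS address.
    Cylinders and heads count from 0; sectors count from 1."""
    return (c * heads + h) * sectors + (s - 1)


def translate(cylinders: int, heads: int, max_cylinders: int = 1024, max_heads: int = 16):
    """Hypothetical simple translation: halve the cylinder count and double
    the head count until the cylinders fit. (An odd cylinder count would
    lose a little capacity with this naive scheme.)"""
    while cylinders > max_cylinders and heads * 2 <= max_heads:
        cylinders //= 2
        heads *= 2
    return cylinders, heads


print(capacity_bytes(615, 4, 17))                # Seagate ST-225: 21411840
print(capacity_bytes(1024, 16, 63))              # the CHS limits: 528482304
print(translate(2048, 5))                        # (1024, 10)
print(chs_to_lba(344, 3, 9, heads=4, sectors=17))  # 23451
```

The ST-225 figure is about 21.4 million bytes, and the CHS limits quoted above cap a drive at about 528 million bytes.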

Short seeks: Hard drive performance measurement is perennially controversial. Seek time is always important, of course, but simply measuring the overall average seek tells us surprisingly little about a drive. Average seek, by definition, measures how quickly the drive moves over a wide range of distances, and the "average" tested seek is about one-third of a full-stroke seek. But in reality, the vast majority of real-world seeks are much shorter than this: the head moves a few tens or hundreds of tracks much more often than it moves a long distance. So a drive that accelerates smartly and settles precisely over the track promptly (i.e., is good at short seeks) is faster in actual use than a drive that does not, even though the two drives can have exactly the same "average seek time". Notice how flat the early part of the seek curve is in the chart for the Western Digital 800BB at right.
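The one-third figure falls out of a simple assumption: that the start and end tracks of each test seek are chosen independently and uniformly at random. That is an assumption for illustration; real benchmarks vary. This Python sketch computes the average seek distance both by brute force and from the closed form (n² − 1) / (3n), then compares it with a full-stroke seek of n − 1 tracks.

```python
def mean_seek_brute(tracks: int) -> float:
    """Average distance between two tracks picked independently and uniformly."""
    total = sum(abs(a - b) for a in range(tracks) for b in range(tracks))
    return total / (tracks * tracks)


def mean_seek_formula(tracks: int) -> float:
    """Closed form of the same average: (n^2 - 1) / (3n)."""
    return (tracks * tracks - 1) / (3 * tracks)


tracks = 1000
full_stroke = tracks - 1
print(mean_seek_formula(tracks) / full_stroke)  # about 0.334
```

The ratio tends to exactly one-third as the number of tracks grows, which is why an "average seek" figure says so little about the short seeks that dominate real use.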

SIMD (Single Instruction, Multiple Data): A simple idea that is difficult to put into practice. Instead of the programmer having to issue a series of repetitive instructions ("do this to this number, now do this to this other number, now do it to ..."), a SIMD instruction says "do this to all of these numbers at once". It is not much use for most computing, but for certain repetitive mathematical tasks, notably sound and graphics processing, the more powerful SIMD instruction sets like SSE can make a huge difference. Games in particular benefit.
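This Python sketch is a counting model, not real SIMD: Python runs both versions one number at a time. It shows the difference in instruction count when one "instruction" handles a whole group of values. The group size of four is an assumption modelled on SSE's 128-bit registers holding four 32-bit floats; the function names are illustrative.

```python
LANES = 4  # assumption: four values per instruction, as with SSE single-precision floats


def scalar_add(a: list[float], b: list[float]) -> tuple[list[float], int]:
    """One add instruction per pair of numbers."""
    result = []
    instructions = 0
    for x, y in zip(a, b):
        result.append(x + y)
        instructions += 1
    return result, instructions


def simd_add(a: list[float], b: list[float], lanes: int = LANES) -> tuple[list[float], int]:
    """One add 'instruction' per group of `lanes` pairs."""
    result = []
    instructions = 0
    for start in range(0, len(a), lanes):
        group = zip(a[start:start + lanes], b[start:start + lanes])
        result.extend(x + y for x, y in group)
        instructions += 1
    return result, instructions


samples = [0.5] * 10
gains = [2.0] * 10
print(scalar_add(samples, gains)[1])  # 10 instructions
print(simd_add(samples, gains)[1])    # 3 instructions
```

The results are identical; only the number of instructions issued changes, which is where the speed-up on repetitive sound and graphics work comes from.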

Software. The stuff that reduces your beautiful new hardware to expensive furniture at least once a week. Nobody knows where it really comes from. The majority of software writers take it up because their school results weren't good enough to get them into something useful like law or economics or engineering. The fact that there is some good software about just goes to show that schools make mistakes too.

Stiction: A cross between 'stick' and 'friction'. This was a fairly common problem with older hard drives, particularly if they had been left off for a long time, and more so if it was cold. The heads weld themselves to the surface of the disk and the drive won't spin up. A temporary cure is usually possible, but you need to get the data off and replace the drive. (No, you can't open it up and squirt it with WD-40!)

Thin-Film Heads: When you place a magnetic material (such as iron) in a magnetic field, the tiny particles tend to line up in the same direction as the field they are in. When you move a wire through a magnetic field, it induces an electrical current in the wire (which is how generators work). All magnetic recording is based on this simple process: video tape, audio tape, floppy discs, and of course, hard discs. (Audio compact discs and CD-ROM drives use a completely different method.)

Inside the hard drive, there are four main components: a magnetic disc, a motor to spin it, a moving read-write head, and some electronics. The head looks rather like an old-fashioned record needle-arm. The hard disc itself is made of a smooth, rigid substance (usually aluminium) and coated with a magnetic material. The data is encoded onto this in the form of magnetic patterns. In the simplest example, an area where all the north poles point left can stand for a zero, and an area where they point right can stand for a one. To line the poles up like this, you need a very small, strong magnet that can be switched on and off as the surface of the disc runs past underneath it. The traditional thin-film head is exactly this: a tiny electro-magnet built up from thin films of metal, laid down by the same kind of photographic process that is used to make silicon chips. As the surface of the disc spins past it, current is switched on and off rapidly, and parts of the disc become magnetised.

To read the data back again, the head is passed over the surface of the disc with the current switched off. The patterns of magnetisation on the disc induce a current through the head, and this current is amplified and filtered to turn it back into exactly the same data that was written to the disc in the first place. Also see MR Heads.

Vaporware. Non-existent product: it's not hardware, it's not software, it's just vapor dreamed up by the marketing department. Sometimes the actual product is still under development and will appear sooner or later; sometimes it has a fatal flaw and will never appear because no-one can make it work right. Sometimes it was never meant to appear in the first place: it was invented to be just real enough to let your sales team use the prospect of it to torpedo one of your competitors' products. See FUD. Two or three special categories of vaporware have been dreamed up to combat the public's increased awareness of it. These include 'condensationware', where the vapor turns into a thin, wet film of real product to disguise the fact that it was really vapor all along (the Pentium Overdrive is a classic example), and the ever-more popular practice of announcing products long before you have any reasonable hope of actually making them.

VESA: Technically, VESA (the Video Electronics Standards Association) is the international association of video card manufacturers. In practice there are two completely different things we mean when we talk about a VESA video card. Most often, it refers to the VESA local bus (VLB), a fast 32-bit extension of the slow old 16-bit ISA bus. The VLB was replaced by PCI, which offered equal speed with less complication.

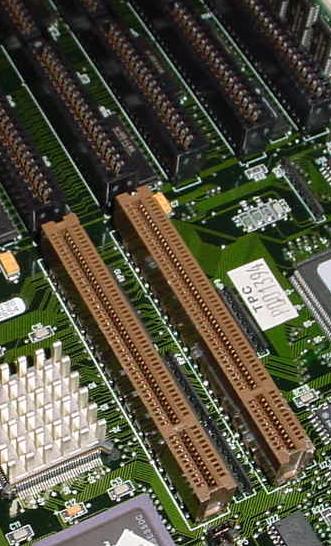

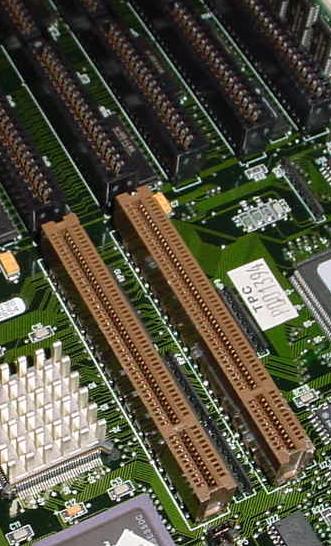

→ Illustration: the twin VESA slots on an IBM Blue Lightning main board. Notice that they form an extension of the ISA slots: so far as the raw system was concerned, VESA video and I/O cards were ISA cards until the boot sequence was complete; it was only when the driver software finished loading that data transfer switched over to the faster 32-bit bus.

Until PCI came of age in about 1996, most good 486 machines used VESA local bus video and I/O cards. They are easy to recognise because they are much longer than other cards. (Early 486s were limited to 16-bit ISA or the very rare and expensive EISA bus. Late models switched to PCI, once Intel finally got the bugs out of it.) Technically, VLB was a short-term kludge, and VLB boards were always prone to local bus problems.

The VESA video software standard is completely different. All modern video chipsets adhere to it. In the old days, every different video card understood the standard 16-color VGA instructions, but went its own way after that for Super VGA. So if you were a programmer writing a game, say, and you wanted more than 640 by 480 at 16 colors, you had to pick a video card and write for that particular card only. You might start with a Tseng ET-4000, then re-write for Trident, re-write again for Cirrus Logic, and S3, and Oak, and Avance Logic, and so on. If you were a games player then you'd often have a lot of trouble getting things to run, especially if your video card was an uncommon one, a Realtek or Western Digital, say. The VESA software standard changed all that by setting a single standard. The list of VESA instructions is understood by all VESA-compatible video cards, and provided your program supports VESA, it should run without having to know or care which chipset you have. Most cards had VESA built into the video BIOS, but some required you to load a small program off disc instead.

Voice coil. Floppy drives and older hard drives use stepper motors for head positioning. The head starts at the outside (track zero) and steps in the required number of times to find the desired track. On a hard drive this can mean 1000 steps or more. Although fairly cheap to manufacture, stepper drives are slow and prone to inaccuracy — particularly because of thermal expansion and contraction of the aluminium disc in cold climates. A stepper drive head has no way of knowing where on the disc it is; if it gets "lost" it has to step outwards several hundred times to be sure of reaching track zero, then count its way back in again. This is the cause of the distinctive knocking sound stepper drives make more and more often as they gradually start to fail — the heads banging up against the safety stops as they try to seek back past track zero.

Voice coil head actuators are faster than steppers and, on their own, less accurate, but a voice coil drive has positioning information encoded on it (e.g. "this is track 212"), so that the head can seek to somewhere near the right track, do a test read, and then make fine adjustments to get to the exact place. Because the positioning information is on the disc itself, thermal expansion and contraction have no effect on accuracy. Originally, voice coil drives had to have a dedicated servo head and "wasted" one disc surface for positioning information: this is why voice coil drives always used to have an odd number of heads. Since about 1995, all new drives have avoided this by embedding the positioning signals next to the data.
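The seek, test-read, adjust loop can be shown with a toy model in Python. Everything about the actuator here is hypothetical: each move is assumed to fall short of the remaining distance by 10%, rounded to whole tracks. The point is only that reading the track number after each move lets an imprecise actuator home in on the exact track, where a stepper would simply count steps and hope.

```python
def servo_seek(start: int, target: int, shortfall: float = 0.1,
               max_moves: int = 10) -> tuple[int, int]:
    """Toy closed-loop seek; returns (final track, number of moves).

    Each move is assumed (hypothetically) to fall short of the remaining
    distance by the fraction `shortfall`, rounded towards zero to whole
    tracks. After every move the head reads the track number recorded on
    the disc, so the next move is based on where it really is.
    """
    position = start
    moves = 0
    while position != target and moves < max_moves:
        distance = target - position
        position += distance - int(distance * shortfall)  # imperfect move
        moves += 1
        # Here a real drive would learn `position` from the servo data.
    return position, moves


print(servo_seek(0, 1000))  # (1000, 4)
print(servo_seek(1000, 0))  # (0, 4)
```

In this model the head lands on tracks 900, 990, 999 and finally 1000; a real drive's servo system is far more sophisticated, but the feedback principle is the same.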

X86: The general term used to refer to the long-lived and varied family of CPUs tracing their heritage back to the Intel 8086. Prominent examples are Intel's own 8088, 286, 386, 486, and Pentium family, and various CPUs from other designers, notably the Cyrix 5x86, 6x86 and 6x86MX, and the AMD K5, K6 and Athlon series. While the X86 family makes up only a small and architecturally limited fraction of all CPU designs, the many X86 variants between them account for the vast majority of computer CPUs made. (Note that a great number of specialised non-X86 CPUs are used in non-computer applications: telecommunications, laser printers, robotics, industrial control devices, and so on.)

Yield: The proportion of manufactured items that turn out to work. Mostly used in relation to semi-conductor fabrication, particularly CPUs. Imagine you make CPUs. Your silicon wafer is (say) 10 inches square, and it costs $100 to make. If your individual chips are 1 inch square, you get 100 chips per wafer before testing. If you have 7 random defects per wafer, then your yield is 93%, and you have to sell the chips for about $1.08 each to break even. Now imagine that you introduce a big new chip and make it on the same production line. It is 2 inches square. You now only get 25 chips per wafer, but you still get 7 defects (on average). This leaves you with 19 good chips, a yield of 76%, and you need to sell them for $5.27 each to cover your costs. Notice how the per-chip cost is much higher. This is why the Pentium Pro is so expensive, and so rare. Also, notice that to keep on making the same number of chips, you need five times as many wafers, so you probably have to build four extra fab plants, at a mere couple of billion dollars each. Did you notice that we have calculated that 25-7=19? Where did the extra defect go? With average luck, it will land on a chip that already has a defect and has been discarded anyway. This can happen with small chips too, but it is more likely with larger ones. Now imagine a massive 5-inch chip. Four of these fit on each wafer, and with 7 defects shared among just four chips, each chip has only about a 13% chance of escaping unharmed. On average you get about one good chip from every two wafers, and your break-even price will be nearly $190 each. This is why big TFT notebook computer screens are so astronomically expensive. It's not so much that they are costly to make, it's just that the manufacturers have to throw so many away.
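The yield arithmetic can be reproduced with a short Python sketch. The model assumes each defect lands on a chip chosen independently and at random, which is the assumption behind the "extra defect" remark. It works with expected (fractional) numbers of good chips rather than rounding to whole chips, and the function names are illustrative.

```python
def expected_good_chips(chips_per_wafer: int, defects_per_wafer: int) -> float:
    """Expected defect-free chips when each defect hits a random chip.

    A given chip escapes one defect with probability 1 - 1/n, and escapes
    all of them with that probability raised to the number of defects.
    """
    p_clean = (1 - 1 / chips_per_wafer) ** defects_per_wafer
    return chips_per_wafer * p_clean


def break_even_price(wafer_cost: float, chips_per_wafer: int, defects_per_wafer: int) -> float:
    """Wafer cost spread over the expected number of good chips."""
    return wafer_cost / expected_good_chips(chips_per_wafer, defects_per_wafer)


WAFER_COST = 100.0
DEFECTS = 7
for size_inches, chips in [(1, 100), (2, 25), (5, 4)]:
    good = expected_good_chips(chips, DEFECTS)
    price = break_even_price(WAFER_COST, chips, DEFECTS)
    print(f"{size_inches}-inch chip: {good:.1f} good of {chips}, break-even ${price:.2f}")
```

The same seven defects that cost the small chip about 7% of its output wipe out roughly 87% of the 5-inch chips.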

The illustration above is an 8080 wafer. You can just see the individual chips in it. AMD and IBM both have good pages describing the manufacture of silicon wafers. The IBM Microelectronics page "How a Chip is Made" is readable and well illustrated. AMD's page gives a good sense of the excitement of developing high technology right at the leading edge. See more detail here.