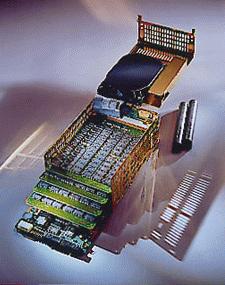

Microsolutions Backpack

These were a wonderful idea. The Backpack hard drive was an external unit that plugged into the printer port. Inside the box was a stock-standard IDE drive from one of the major makers.

But why not just use USB? Because in those days there was no USB — USB didn't exist at all until 1996, didn't become common until after 1998, and wasn't actually useful, trouble-free and practical before about 2000 or 2001. (For years, technicians used to joke that USB stood for "Useless Serial Bus.") Backpacks were around long before that. Our first one had an 80MB Conner drive inside — that dates it to about 1992.

The Backpack device driver needed only a single line added to your CONFIG.SYS, consumed less than 5k, and it could be loaded high. In the days when we used Backpacks, they ran about as fast as a standard 80MB or 512MB internal IDE drive (about 1MB per second transfer rate), and it took less than 30 seconds to fit one. Unlike Zip Drives, they were real hard drives with typical hard drive seek performance — about 12ms back then. You could even daisy-chain two or three together or keep your printer as well. The Red Hill workshop ran on Backpacks for years. We had four in all, each one bigger than the last, and before we got our first CD burner we used them all day, every day.

Microsolutions were still in business and still making Backpack drives until early in the new century. They also made a wide range of external floppies, tape drives, and CD-ROM drives, which we used to use for old CD-less notebooks sometimes.

Quantum Bigfoot

The Bigfoot family of drives was intended to provide reasonably fast, reliable storage at the lowest possible cost per megabyte. They did this by using a 5.25 inch form factor — the same size as a CD-ROM drive or old-style floppy. But don't get the idea these were low-tech. The larger 5.25 inch platters allowed more data to be stored than was possible on a standard 3.5 inch disc, and despite the slow 3600 RPM spin rate, the actual speed at which data passed under the read head was very good — again, because of the increased circumference. The result is that Bigfoot drives used to have excellent data transfer rates, faster than most 4500 RPM 3.5 inch drives, and they cost less to make.
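A rough way to see the circumference argument is to compare how fast the outer edge of each platter moves past the head. The sketch below is only an approximation: it assumes the nominal form-factor sizes (5.25 and 3.5 inches) stand in for the real platter diameters, and that both drives pack bits equally densely along a track. Neither figure comes from a data sheet, and the function name is ours.

```python
import math

def edge_velocity_in_per_s(diameter_in: float, rpm: float) -> float:
    """Linear speed of the platter's outer edge, in inches per second."""
    # One revolution covers pi * diameter inches; rpm / 60 is revolutions per second.
    return math.pi * diameter_in * rpm / 60.0

# Assumption: nominal form-factor diameters, not measured platter sizes.
bigfoot_edge = edge_velocity_in_per_s(5.25, 3600)
small_drive_edge = edge_velocity_in_per_s(3.5, 4500)
ratio = bigfoot_edge / small_drive_edge

print(f"5.25 inch at 3600 RPM: {bigfoot_edge:.0f} in/s")
print(f"3.5 inch at 4500 RPM:  {small_drive_edge:.0f} in/s")
print(f"ratio: {ratio:.2f}")
```

On those assumptions the big platter's edge moves about 20 percent faster despite the lower spin rate, which is consistent with the transfer rate advantage described above.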

There were, however, some significant performance penalties from the large form factor. Average seek time for a typical Bigfoot was only fair at between 12 and 14 milliseconds. (But nevertheless an excellent achievement for such a big drive.) More importantly, the latency was very high. Latency is the time (on average) that a drive spends waiting for the required data to pass under the read head once the head has been positioned over the correct track. If you think about it, it is obvious that latency is directly related to drive RPM. A drive's actual access time is composed of three things: a small amount of command overhead ("thinking time"), the seek time (as the head moves to the correct track), and the latency (as the head waits for the correct sector to rotate under it). We don't usually quote latency figures as they are directly related to spin rate and don't vary for any given RPM. (The faster the spin rate, the lower the latency.)
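Because latency depends only on spin rate, it can be worked out directly: on average the drive waits half a revolution. The sketch below shows the arithmetic. The function names are ours, and command overhead defaults to zero simply because we have no figures for it.

```python
def average_latency_ms(rpm: float) -> float:
    """Average rotational latency in milliseconds: the time for half a revolution."""
    ms_per_revolution = 60_000.0 / rpm
    return 0.5 * ms_per_revolution

def access_time_ms(seek_ms: float, rpm: float, overhead_ms: float = 0.0) -> float:
    """Access time = command overhead + seek time + average latency."""
    return overhead_ms + seek_ms + average_latency_ms(rpm)

for rpm in (3600, 4500, 5400, 7200, 10_000):
    print(f"{rpm:>6} RPM: {average_latency_ms(rpm):.2f} ms latency")

# A 14 ms seek at 3600 RPM, ignoring command overhead.
print(f"{access_time_ms(14.0, 3600):.1f} ms")
```

Note that 7200 RPM works out to about 4.17ms, so a published figure of 4.1ms or 4.2ms is just a matter of rounding.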

Consider the performance figures for some representative 4GB drives. Notice how poorly the Bigfoot compares:

| Model | Diameter | Spin Rate | Seek time | + Latency | = Access time |
|---|---|---|---|---|---|
| Quantum Bigfoot 4.3 | 5.25 inch | 3600 RPM | 14ms | 8.3ms | 22.3ms |
| Seagate Medalist 4.3 | 3.5 inch | 4500 RPM | 12ms | 6.7ms | 18.7ms |
| IBM Deskstar 4 | 3.5 inch | 5400 RPM | 9.5ms | 5.6ms | 15.1ms |
| Western Digital 4360 | 3.5 inch | 7200 RPM | 8.0ms | 4.1ms | 12.1ms |
| Seagate Cheetah 4.5 | 3.5 inch | 10,000 RPM | 5.2ms | 3.0ms | 8.2ms |

Looking at the right-most column in the table, notice how much difference there is in the overall access times. The two SCSI drives (the WD and the Cheetah) had double the performance, but cost almost $1000 more than an IDE unit. However, the 3.5 inch Medalist was only about $30 more than a Bigfoot, and noticeably faster, and another $30 got you a much faster 5400 RPM IDE like the Deskstar 4 (or Quantum's own Fireball ST for that matter).

The Bigfoot theory is certainly interesting, and Quantum did a great job developing these drives as far as they did, but they really only made sense where cost was much more important than speed. If you were spending two or three thousand dollars on a new computer system, you were wise to do yourself a favour and specify a 5400 RPM 3.5 inch drive. We never sold them new but we used to see Bigfoot drives in the workshop from time to time, and they were always quite noticeably slower than 3.5 inch drives. Incredibly, we once saw a Bigfoot factory fitted to a top of the range Pentium Pro system. This was the moral equivalent of putting four-inch budget tyres on a V8. (It ran more like a Pentium-90 than a Pro, and Hewlett-Packard should have been ashamed of themselves!)

Quantum used to have an easy to read and very interesting white paper on 5.25 inch drive performance, which is doubtless gone by now. It was interesting in two ways. Firstly they pointed out a non-obvious advantage to the larger form: although it has higher latency and slower seek, with more data per track the 5.25 inch drive has to switch heads and seek less often. Secondly, they applied this with a transparently self-serving example: comparing a 2.5GB Bigfoot with a long-obsolete 4500 RPM 850MB 3.5 inch Trailblazer. For a fair comparison, they should have used a (then current) Fireball ST or TM — even the budget model 4500 RPM Fireball TM was easily faster. So read with care!

Here at Red Hill, we always used 3.5 inch drives for everything, even the entry level. The few dollars we could have saved by using Bigfoot drives for our lowest-priced systems simply didn't justify the performance hit.

Iomega Zip Drive

Removable-media hard drives have been tried many times over the years, never with a great deal of success. Iomega's Zip Drive, however, became quite popular for a couple of years. For a few hundred dollars you could get an external parallel port device that took 100MB removable hard drive cartridges that looked just like overgrown floppy discs, and you could use as many as you liked. In those days, these were considered great things for backup. (Tape drives were such a pain!)

The performance wasn't bad, but the cost per megabyte was quite high — about three times more expensive than a conventional hard drive. A Zip drive's data transfer rate was quite respectable at around 1MB per second, but its 29ms seek time was very slow by hard drive standards. (Most of the 40MB drives fitted to old 286 systems were about this speed. On a Pentium, 29ms was glacial.)

In the early days, Zip Drive owners seemed to love them. We were not all that keen on them. We tried them for a while, and couldn't really find anything to fault, but they never became one of our daily tools. Perhaps it was because 100MB at a time was quite limiting when you were used to working with a gigabyte or two. Perhaps it was because our Backpack hard drives were so good. Or perhaps we just didn't use them enough to get the habit.

There have been a host of other, broadly similar products: Iomega's Jaz drive stored about a gigabyte per disc, and SyQuest made a range of well-respected removable-media hard drives for some years. On the whole, we lean towards using a real hard drive, because it's cheaper, more reliable, and much faster, or else a CD burner, which is relatively slow and clumsy but much cheaper per megabyte.

Later on Zip Drives became available in a 250MB variant, and in USB or as an internal IDE or SCSI unit. In the end though, much as there is a need to replace the venerable 1.44MB floppy drive, Zip drives are just too small, too slow, too unreliable, and far too expensive to operate. They seem to be fading away off the market now, and that is no bad thing.

SDX Drives

SDX stood for Storage Data Acceleration. It had little to do with hard drives as such. Rather, it was a proposed different way of attaching a CD-ROM drive. First, some background.

The standard way of attaching a CD drive to a PC is with a dedicated bus: early models (mostly single and twin speed) used one of three proprietary bus connections: Panasonic, Mitsumi, or Sony. SCSI was, and still is, another effective though expensive option. More recent CD-ROM drives, however, pretty much all used the ATA bus, better known as IDE. IDE CDs were fast, inexpensive, and easy to configure. For many years, all PCs had two IDE controllers, each of which could support two IDE devices: typically hard drives and a CD-ROM drive, though tape drives and CD writers were also available in IDE. (DVD drives came later, but the same applied.)

The CD drive was usually configured as a stand-alone device on the second IDE controller, or else slaved to the hard drive on the primary IDE controller. Generally, it didn't much matter, though slaved CD drives could sometimes impair the performance of your hard drive. Performance was good: even the old ATA-33 maximum IDE data transfer rate of 33MB a second was plenty high enough to cope with a CD drive, and the later 100MB/sec ATA-100 standard left plenty of headroom for future improvement as we moved towards the theoretical maximum in CD speed.
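To put the headroom in numbers, a single-speed CD-ROM drive delivers roughly 150KB per second, and faster drives are rated as multiples of that. The sketch below compares a drive's peak rate with the bus limit. It treats 1MB as 1000KB for simplicity, and the helper names are ours.

```python
CD_1X_KB_PER_S = 150  # approximate data rate of a single-speed CD-ROM drive

def cd_rate_mb_per_s(speed_multiple: int) -> float:
    """Peak data rate of an 'Nx' CD-ROM drive, in MB per second (1MB = 1000KB)."""
    return speed_multiple * CD_1X_KB_PER_S / 1000.0

def bus_headroom(bus_mb_per_s: float, speed_multiple: int) -> float:
    """How many times faster the bus is than the drive's peak rate."""
    return bus_mb_per_s / cd_rate_mb_per_s(speed_multiple)

for speed in (2, 8, 32):
    rate = cd_rate_mb_per_s(speed)
    print(f"{speed:>2}x CD: {rate:.1f} MB/s, ATA-33 headroom {bus_headroom(33, speed):.1f}x")
```

Even a 32 speed drive at its peak uses only a fraction of what ATA-33 can carry.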

Western Digital's SDX proposal was quite different: an SDX CD-ROM would be designed to attach to an SDX hard drive, which was attached in turn to the standard IDE controller. It was not an IDE slave device; SDX used a different connection altogether, with a 10-pin cable instead of the 40-pin IDE cable.

An SDX hard drive was proposed to automatically cache the relatively slow CD-ROM drive, leveraging the hard drive's much faster seek time and somewhat faster data transfer rate to produce faster CD-ROM performance. This was the main point of SDX — hard drive CD cache.

Cache can make a huge difference to CD performance. RAM caching of the CD drive has been around since the very early days of double-speed drives, and is a standard part of all major operating systems. The old DOS Smart Drive, for example, automatically used a small amount of RAM to cache the CD drive as well as the hard drive. (Provided that you loaded SMARTDRV after MSCDEX, of course.) However, while RAM was very fast — roughly 2000 times faster than a CD-ROM drive — the maximum sensible size of a RAM-based cache in those days was only a few MB, and CD discs are around 650MB each.

A much more practical idea was to use the hard drive to cache the CD drive. Mid-1990s hard drives were much slower than RAM, but still about 10 times faster than a CD-ROM. Even then, the common hard drives were so large that setting aside a gigabyte or so for a CD cache was usually practical. This is what SDX was all about. An SDX hard drive was designed to cache any SDX CD-ROM drives attached to it. In round figures, SDX could double the performance of the CD drive, and do it without using any extra CPU or PCI resources, and without adding yet another layer to already-fragile file systems.
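How much a cache helps depends on how often the wanted data is already in it. The sketch below is a simplified model, not anything from the SDX proposal: it assumes a cache hit is served at the hard drive's speed (about 10 times faster than the CD, as above), a miss is served at plain CD speed, and it ignores the cost of filling the cache. The hit rates are made up for illustration.

```python
def effective_speedup(hit_rate: float, cache_speed_ratio: float) -> float:
    """Speedup over an uncached CD drive for a given cache hit rate.

    hit_rate: fraction of requests served from the cache (0.0 to 1.0).
    cache_speed_ratio: how many times faster the cache is than the CD drive.
    """
    if not 0.0 <= hit_rate <= 1.0:
        raise ValueError("hit_rate must be between 0 and 1")
    # Time per request relative to the uncached drive (uncached = 1.0).
    relative_time = hit_rate / cache_speed_ratio + (1.0 - hit_rate)
    return 1.0 / relative_time

# Hypothetical hit rates with a hard drive cache 10 times faster than the CD.
for hit_rate in (0.25, 0.5, 0.6, 0.9):
    print(f"hit rate {hit_rate:.0%}: {effective_speedup(hit_rate, 10.0):.2f}x")
```

Under this model a hit rate somewhere between 50 and 60 percent is enough to roughly double CD performance, which squares with the round figure above.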

On the other hand, there was no need at all to use a new and non-standard type of CD-ROM drive in order to gain the benefits of hard drive CD cache. It could easily be done in software, with performance gains equal to or better than SDX. Even back when SDX was being proposed there were several software CD caches on the market, and they worked with any type of CD-ROM: old or new, IDE or SCSI. (Even old double-speed Panasonic, Mitsumi and Sony interface drives.) Quarterdeck, famous for Desqview and QEMM, made one, as did Micro Design. (Micro Design's old Super PC-Kwik was far and away the best hard drive cache program for DOS-Windows, so their CD one ought to have been pretty good too.) CD cache software was much easier to upgrade than hard drive-based firmware, and offered much more scope for customisation to individual CD titles, to the point of "knowing" which scene comes next in a top-selling game, and pre-loading it for instant action. (This, by the way, is where those cheap '100x' CD-ROM drives of the late '90s came from: they were just standard 32 speed drives with bundled cache software.)

To be a success, SDX had to be adopted by other hard drive manufacturers, not just Western Digital. WD shot themselves in the foot on this. They claimed that they intended to make the SDX interface an open standard, like IDE or SCSI, but they did not do it in time. Other hard drive makers, notably Maxtor, regarded SDX as proprietary technology and complained that Western Digital were asking for license fees — hardly the sign of an open standard. (Contrast this with Quantum's Ultra ATA technology, which was licensed free of charge to all.)

In reality, we suspect that WD were trying to have it both ways: use the other makers' support to establish SDX as the new standard, but only after WD had had a nice revenue-boosting period as the only drive manufacturer with shipping SDX product. Sort of 'let's have an open standard but not yet'. After Oak (the major CD-ROM chipset manufacturer) and four out of the five major hard drive manufacturers all slammed SDX in a rather messy public brawl at the end of 1997, Western Digital quietly forgot about it and their announced SDX drives never shipped.

The documents have long since disappeared from the web. For the record, Western Digital published the SDX announcement and related documents, and the other makers' reply was posted in three or four places, Maxtor's site among them, along with the related white paper.

Western Digital Portfolio

Western Digital got a bit of a yen for odd-ball ideas in the mid Nineties. The Portfolio range introduced a new and non-standard form-factor for notebook drives — 3 inches, rather than the normal 2.5. At first sight, it seemed like a silly idea. Why make a notebook drive bigger? Surely the idea of a notebook drive is to make it as small and light as possible? Indeed it is — but Western Digital said they had come up with a way to get more storage in less space using a bigger drive. Here's why:

The trend in notebook design was towards larger, flatter forms. This was driven by the ever-increasing size of notebook screens. "Thin flat" was in. The 3 inch Portfolio drive followed in the same direction. Increasing the platter by a half-inch allowed up to 70% more storage per disc. This meant that a very thin, single-platter drive could be used where two platters would be required for an equivalent 2.5 inch drive. (Sound a bit like a Quantum Bigfoot on a tiny scale? It's a similar idea.) By having a slightly bigger platter, WD got double the storage per cubic inch. (Note: cubic inch, not square inch.) In addition, the bigger disc was easier to spin faster (for performance), and could share many components with 3.5 inch desktop drives, which made it cheaper to manufacture.

Contrast this approach with that of IBM (then and now the world leader in notebook drives, though under the Hitachi banner since 2003). IBM stuck to the standard 2.5 inch form, and continued to raise the bar with ever-increasing speed and capacity, mostly via incredibly high areal density. To do this, IBM used ultra-high technology: MRX and GMR heads, glass platters, advanced PRML, high spin rates, and clever formatting techniques. Of course, with 40% of the notebook hard drive market, IBM could afford the research and manufacturing overheads, and the technology flowed through to their desktop and server drives as well.

The 3 inch form was, on the other hand, ideal for Western Digital. It was innovative (which they liked), it didn't require MR heads or particularly high areal density (which were not WD strengths), it was cost-effective, and it gave WD a way to break into a part of the market they were not traditionally strong in. Several leading notebook vendors adopted the 3 inch form, and it looked to have a good chance of catching on.

The flagship of the Portfolio range had a very good set of specifications at the time: two platters, 2.1GB, 300/100g shock resistance, MR heads and PRML, 14ms seek time, 4000 RPM, and 83Mbit/second transfer rate. This compared well with all but the very top end of IBM's notebook range, and was superior to just about everything else around.

However, in January 1998 a financially troubled WD dropped the Portfolio range in order to concentrate on core business: 3.5 inch drives for the desktop market. JTS, the only other maker of 3 inch drives, did likewise. On the whole, we thought Western Digital's other late-nineties innovation, SDX, was a bad idea. But the demise of the Portfolio form factor seems a pity. It had great promise and it's a shame that one or another of the other major manufacturers didn't take it up. 1997 was a very bad year for the hard drive makers, with oversupply problems, slack demand and poor margins; they all lost money and WD dropped the Portfolio range because of funding issues, not lack of technical merit. It had looked set to make quite a difference.

Seagate Barracuda 2HP

This Seagate drive, long out of production now, used an almost unique approach to high performance. Although similar in most respects to the then-current range of high-speed Barracuda drives, it used two heads in parallel to double data transfer rates.

Almost all hard drives have more than one read-write head; four and six-head drives are common, and a dozen or more used not to be unusual. But only one head is used at any one time, and the maximum data transfer rate is governed by the amount of data passing under the head. The Barracuda 2HP, however, was able to split the data stream up into two halves, sending one half to each of two heads, and recombine the data when reading it back. In an era when the best 7200 RPM drives were capable of 50 or 60 Mbit/second transfer rates, the Barracuda 2HP managed 113Mbit/second. It was easily the fastest hard drive on the planet.
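The split-and-recombine idea can be sketched in a few lines. This is only an illustration of the principle: we assume alternate bytes go to each head, whereas the real drive's granularity and encoding are not something we have details of, and the function names are ours.

```python
def split_stream(data: bytes) -> tuple:
    """Split a data stream into two halves, one per head (alternate bytes)."""
    head_a = data[0::2]  # bytes 0, 2, 4, ...
    head_b = data[1::2]  # bytes 1, 3, 5, ...
    return head_a, head_b

def recombine(head_a: bytes, head_b: bytes) -> bytes:
    """Interleave the two halves back into the original stream."""
    out = bytearray(len(head_a) + len(head_b))
    out[0::2] = head_a
    out[1::2] = head_b
    return bytes(out)

original = b"Barracuda 2HP"
half_a, half_b = split_stream(original)
restored = recombine(half_a, half_b)
print(half_a, half_b, restored == original)
```

Each head handles only half the bytes, so with both heads working at once the same data passes through in about half the time.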

This was not a new idea, but it was the first, and so far the only, implementation of it in a mainstream drive. Imprimis (Control Data's storage division, now part of Seagate) had produced a series of multiple-head parallel drives for supercomputers starting as early as 1987.

So why has 2HP technology disappeared now?

First, it's possible to achieve the same results by combining several individual drives in a RAID array, letting the RAID controller card or controller software perform the complex task of splitting and recombining data. RAID has become dramatically cheaper over the past few years, and is commonplace in high-end server systems.

Secondly, time to market. New drive models are coming onto the market more and more rapidly. By the time a design team has taken an existing high-speed drive and spent a lot of money developing a twin-head parallel version of it, the next generation of single-head drives are nearly ready — and they will equal the performance of the 2HP drive at much lower cost simply through increased spin rates and ever-higher areal density.

In short, 2HP drives will not have another chance to become cost and performance competitive unless there is a major slow-down in areal density increases — currently running at 60 percent a year. Until then, the Barracuda 2HP will remain one of a kind.

| Barracuda 2HP | Performance | 1.18 | |
|---|---|---|---|
| Data rate | 113 Mbit/sec | Spin rate | 7200 RPM |
| Seek time | 8.0ms | Buffer | 1MB |
| Platter capacity | 205MB | Interface | Fast Wide SCSI 2 |
| ST-12450W | 1.85GB | 18 data heads (19 total) | 10 platters |

Quantum Solid State Drives

Various makers, notably Quantum (now part of Maxtor), make a fairly extensive range of solid state disc drives: that is, drives with no moving parts at all. Essentially, they are a hard drive-shaped box full of RAM chips with a SCSI connector bolted on. The cost (of course) is astronomical, and the performance is phenomenal.

There are both volatile and non-volatile versions in sizes up to several gigabytes. Access times are near enough to zero as makes no difference, and data transfer rates are limited only by the SCSI bus.

No, we don't sell them. We don't know anyone that could afford one!

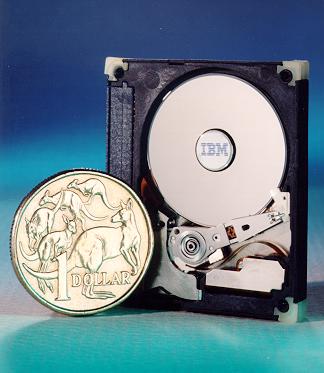

IBM Microdrive

These are an amazing piece of IBM high technology in real life. The Microdrives are one inch across, easily smaller than a PCMCIA device, but they are real hard drives, with moving read heads and a tiny one-inch disc spinning at an incredible 4500 RPM. The two release models stored 170 and 340MB, and their performance was similar to a typical 720MB desktop drive of just a few years before. Doubtless there are newer versions now, which should be easy enough to look up on the IBM website if you are interested.

The 170MB version was, so far as we know, unique in that it has only one head. Up until the areal density explosion at the turn of the century, no drive had ever had a single head. (If you have ever heard of another commercial hard drive with just one head before about 1998 or so, we'd love to know about it.)

They are unlikely to play a big role in the computer world. There is a minimum practical size for the human-interface components like keyboard and screen, and that means that the traditional 2.5 inch notebook drive will be very hard to replace. Microdrives will only replace notebook drives if they are faster and store more data at less cost, and that is not likely to happen anytime soon—possibly not ever.

But they are becoming an essential part of a huge range of electronic products: think of digital cameras, PDAs, video recorders, maybe even mobile phones. The possibilities are endless, and the chief competing technology is not traditional rotating magnetic storage but silicon-based flash RAM.

| Model | Capacity | Heads | RPM | Seek (ms) | Data rate (Mbit/sec) | Performance |
|---|---|---|---|---|---|---|
| DMDM-10170 | 170MB | 1 GMR | 4500 | 15 | 49 | 0.76 |
| DMDM-10340 | 340MB | 2 GMR | 4500 | 15 | 49 | 0.76 |
| DSCM-10340 | 340MB | 1 GMR | 3600 | 12 | 59.9 | 0.79 |
| DMDM-10512 | 512MB | 1 GMR | 3600 | 12 | 59.9 | 0.79 |
| DMDM-101000 | 1GB | 2 GMR | 3600 | 12 | 59.9 | 0.79 |